- Welcome to Fantasies Attic.

Halloween Gift Page

Halloween Gathering has begun! You can read all about it HERE.

Participants:

in my little paws

Petege

Aelin

Llola Lane

emanuela1

Hipshot

prae

Nemesis

BoReddington

EarwenM

panthia

sanbie

GlassyLady

shadow_dancer

Disparate Dreamer

Halloween is coming!

McGrandpa

2025-10-10, 01:04:27 Hey Zeus FX, welcome back! Great job to Dark Angel, she swatted the heck outta some gremlins!

Zeus Fx

2025-10-09, 13:07:22 Hello everyone. It is good to be back.

Hipshot

2025-10-02, 08:51:51 Sounds like the gremlins have once again broken loose. Think we need to open the industrial microwaves.

Skhilled

2025-10-01, 18:54:22 Okey, dokey. You know how to find me, if you need me.

DarkAngel

2025-10-01, 17:18:59 nopers just lost a bit

Skhilled

2025-09-30, 20:07:14 DA, are you still locked out?

DarkAngel

2025-09-29, 15:34:23 Hope site behaves for a bit.

McGrandpa

2025-09-29, 14:04:22 Don't sound so good, Mary!

McGrandpa

2025-09-29, 14:03:44 My EYES! My EYES! Light BRIGHT Light BRIGHT!

DarkAngel

2025-09-27, 17:10:12 I locked me out of admin it would seem lol

Vote for our site daily by CLICKING this image:

Awards are emailed when goals are reached:

Platinum= 10,000 votes

Gold= 5,000 votes

Silver= 2,500 votes

Bronze= 1,000 votes

Pewter= 300 votes

Copper= 100 votes

2025 awards

2024 awards

Current thread located within.

All donations are greatly needed and appreciated, and go toward the Attic/Realms server fees and upkeep.

Thank you so much.

Main Stream

Asylum TOS

Gallery How To

Forum Hints

The Solarium

Our Gallery

Freebie Show Offs

Conservatory

Aelin's Fantastic Finds

Anime and Manga

Sponsors Showcase

Contest Wing

Contest Info

Monthly Contests

Weekly Contests

Poser Area

News and Updates

Q & A

Tutorials

Connect With Us!

Twitter

FaceBook

DeviantArt

Pinterest

Banner Exchange

Weekly Winners

SAOTW

Sister Hall

by Paul

TOTW

Little Drummer Boy

by Egzariuf

The prizes for both categories are:

Choose 1 item from the Fantasies Realm Market

AND

Choose 3 items from Sponsors Showcase

August 2025 Contest Winners

1st Place

Cheyenne Woman and her horse by WingedWolf

2nd Place

Waiting by Petege

September 2025 Contest Winners

1st Place

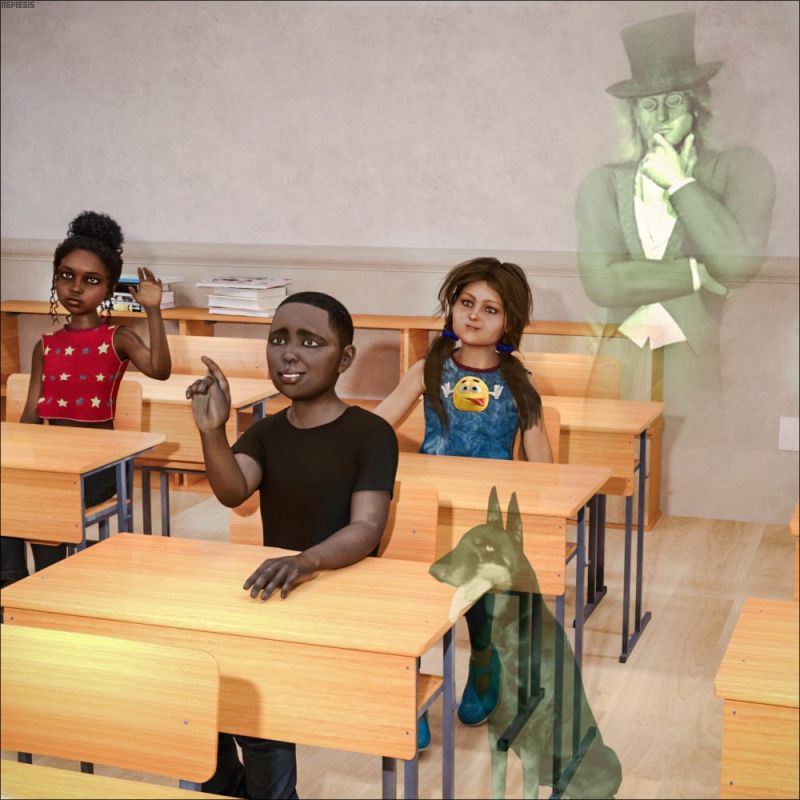

Nobody sees them but they're here... by Nemesis

2nd Place

Old Student Return by Emanuela1

| Member | Count |
| --- | --- |
| Jherrith | 62550 |
| Fafnir | 16702 |
| McGrandpa | 16110 |
| rrkknight3 | 8078 |
| Agent0013 | 7377 |
| M-Callahan | 6104 |
| FrahHawk | 6030 |
| Radkres | 5511 |
| Margy | 4811 |
| MarciaGomes | 4340 |
| Star4mation | 4017 |
| Dreamer | 3975 |
| Wizzard | 3548 |
| dRaCX | 3535 |
| Neimrok | 3492 |
| MilosGulan | 3418 |
| parkdalegardener | 3096 |
| Paul | 3070 |
| Noshoba | 3059 |
| sanbie | 2609 |
| RodS | 2602 |
| Katt | 2553 |
| Nemesis | 2150 |
| Twisted.Illusionz | 2045 |
| fruity | 1960 |
| Carolann | 1769 |
| HadCancer | 1747 |
| Scouseaphrenia | 1731 |
| deeleelaw57 | 1580 |
| Hipshot | 1457 |
| AmirA | 1416 |
| CalieVee | 1398 |
| Napalmarsenal | 1344 |
| AngellsGraphics | 1251 |
| Bea | 1157 |
| Heitaikai | 1063 |
| Burpee | 971 |
| heavenlee | 939 |
| Skhilled | 913 |
| Petege | 840 |
| ArtByMivan | 744 |

Members

- Total Members: 252

- Latest: onesidesamecoin

Stats

- Total Posts: 96,707

- Total Topics: 10,120

- Online today: 1,194

- Online ever: 5,532 (March 10, 2025, 02:26:56 AM)

Is AI Stealing My Art?

Started by parkdalegardener, January 14, 2023, 02:07:44 PM
